Join the Cilium Slack

Cilium is an open source project that anyone in the community can use, improve, and enjoy. We'd love you to join us on Slack! Find out what's happening and get involved.

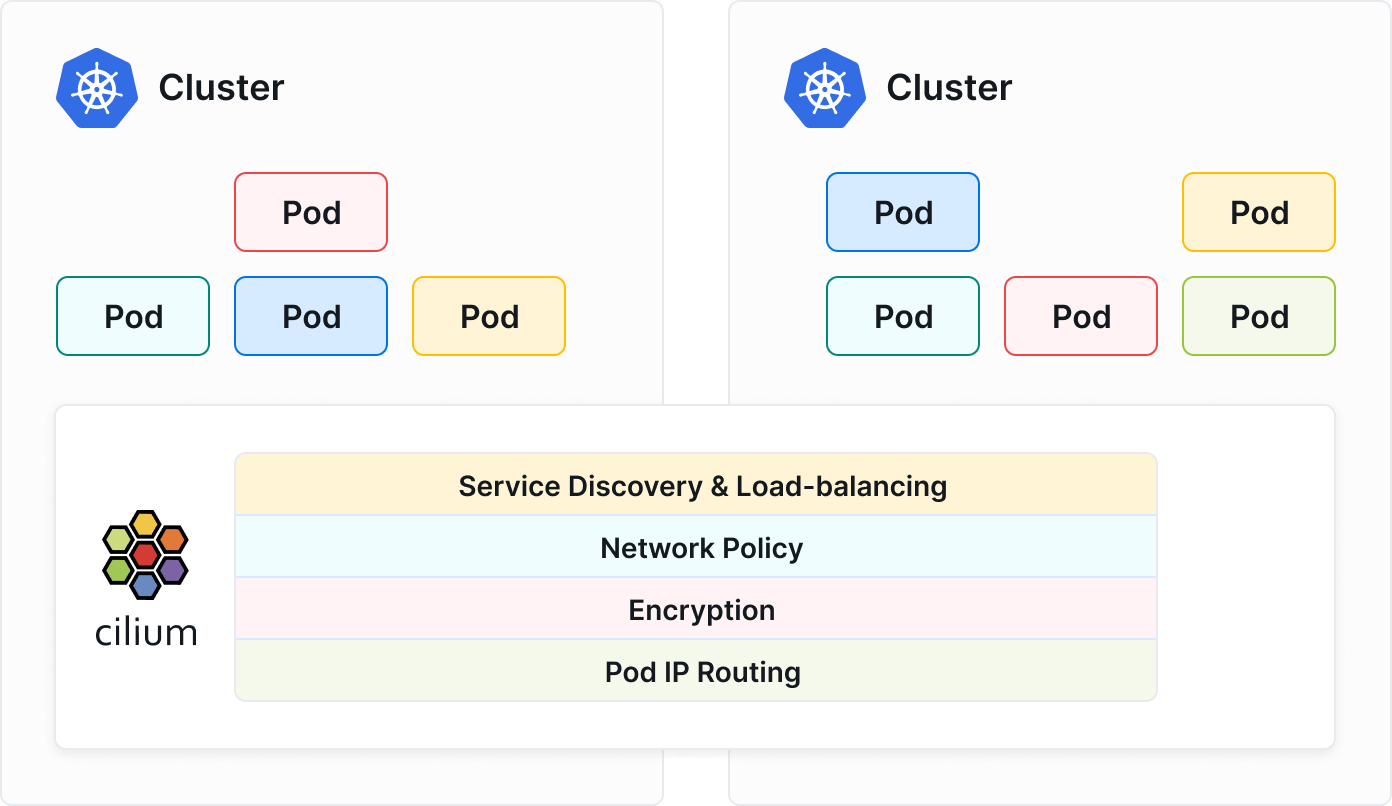

Multi-cluster Kubernetes setups are often adopted for reasons like fault isolation, scalability, and geographical distribution. This approach can lead to networking complexities. Traditional networking models, in this context, struggle with service discovery, network segmentation, policy enforcement, and load balancing across clusters. Additionally, managing security protocols and policies across multiple environments can be challenging due to the distributed nature of services.

Cilium Cluster Mesh connects the networks of multiple clusters in such a way that pods in each cluster can discover and access services in all other clusters of the mesh, provided all the clusters run Cilium as their CNI. This effectively joins multiple clusters into one large unified network, regardless of the Kubernetes distribution each cluster runs or where it is located.

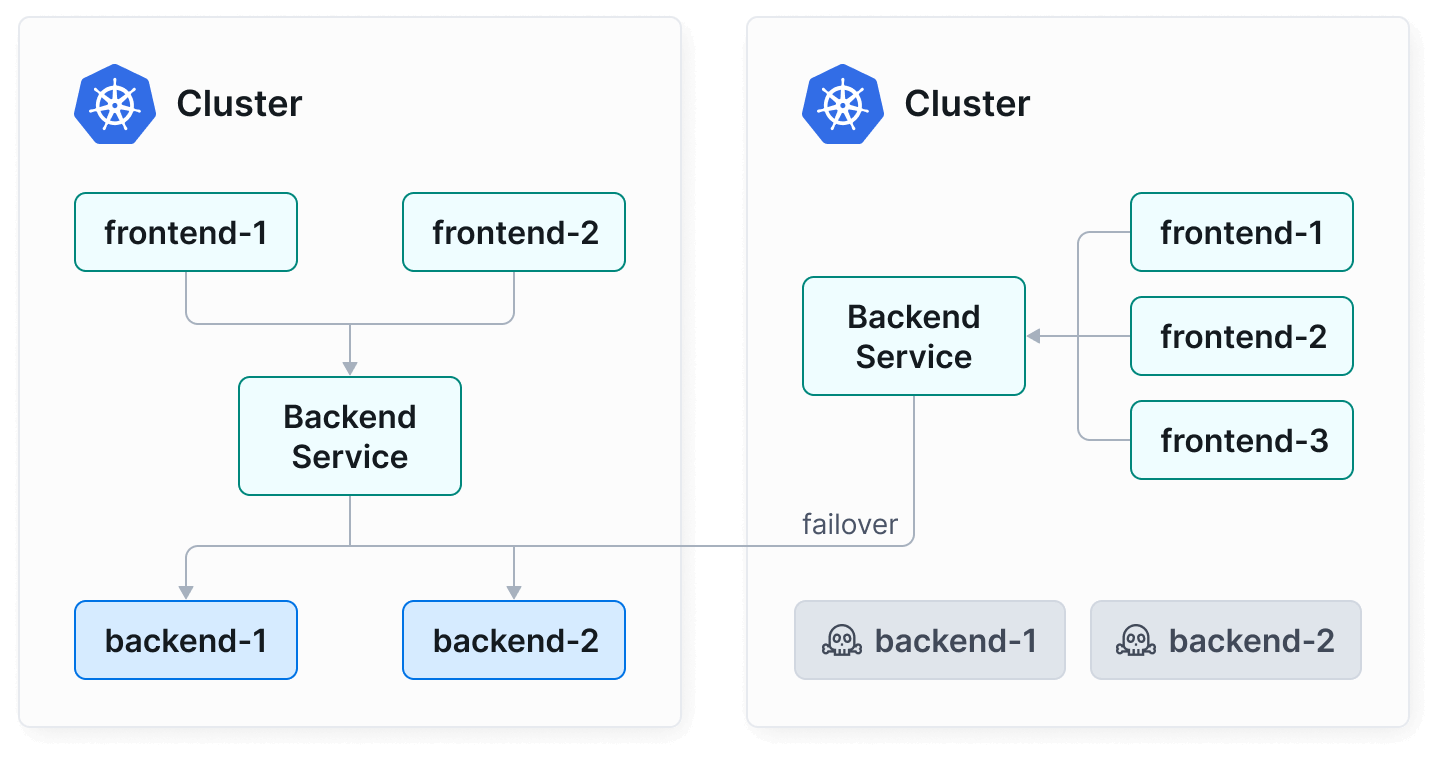

Cluster Mesh enhances your services' high availability and fault tolerance. It supports operating Kubernetes clusters across multiple regions or availability zones. If resources in one cluster become temporarily unavailable, are misconfigured, or are taken offline for upgrades, it enables failover to other clusters so your services remain accessible.
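The failover idea can be pictured with a small conceptual model. This is only a sketch and not Cilium's implementation: the function name `pick_backends`, the preference for local backends, the health set, and the IP addresses are all assumptions made for illustration.

```python
def pick_backends(local_cluster, backends_by_cluster, healthy):
    """Return healthy backends in the local cluster, else healthy remote ones.

    Hypothetical model: the local-first preference is an assumption,
    not a statement about how Cilium selects backends.
    """
    local = [b for b in backends_by_cluster.get(local_cluster, []) if b in healthy]
    if local:
        return local
    # No healthy local backend is left: fail over to the other clusters.
    return [
        b
        for cluster, backends in backends_by_cluster.items()
        if cluster != local_cluster
        for b in backends
        if b in healthy
    ]


# Illustrative data: one backend per cluster.
backends = {"cluster-1": ["10.1.0.5"], "cluster-2": ["10.2.0.9"]}
all_up = pick_backends("cluster-1", backends, {"10.1.0.5", "10.2.0.9"})
local_down = pick_backends("cluster-1", backends, {"10.2.0.9"})
```

Here `all_up` contains only the local backend, while `local_down` contains the backend from the second cluster, which is the failover case the paragraph describes.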

Cluster Mesh automates service discovery across your Kubernetes clusters. Using standard Kubernetes services, it automatically merges services with identical names and namespaces across clusters into a global service. This means your applications can discover and interact with services, irrespective of the cluster they reside in, greatly simplifying cross-cluster communication.
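As a rough illustration of the merging rule described above, the sketch below groups service backends by namespace and name across clusters. It is a conceptual model under stated assumptions, not Cilium code: the `Service` type, the `merge_global_services` function, the cluster names, and the IP addresses are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple


class Service(NamedTuple):
    """Hypothetical record of one Kubernetes service in one cluster."""
    cluster: str
    namespace: str
    name: str
    backends: tuple  # pod IPs backing the service in that cluster


def merge_global_services(services):
    """Group backends by (namespace, name), regardless of source cluster."""
    merged = defaultdict(list)
    for svc in services:
        merged[(svc.namespace, svc.name)].extend(svc.backends)
    return dict(merged)


# Illustrative data: "rebel-base" exists in both clusters, "billing" in one.
services = [
    Service("cluster-1", "default", "rebel-base", ("10.1.0.5", "10.1.0.6")),
    Service("cluster-2", "default", "rebel-base", ("10.2.0.9",)),
    Service("cluster-2", "default", "billing", ("10.2.0.12",)),
]
global_view = merge_global_services(services)
```

In this model, a client resolving `default/rebel-base` sees the backends of both clusters as one set, which is the sense in which identically named services form a single global service.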

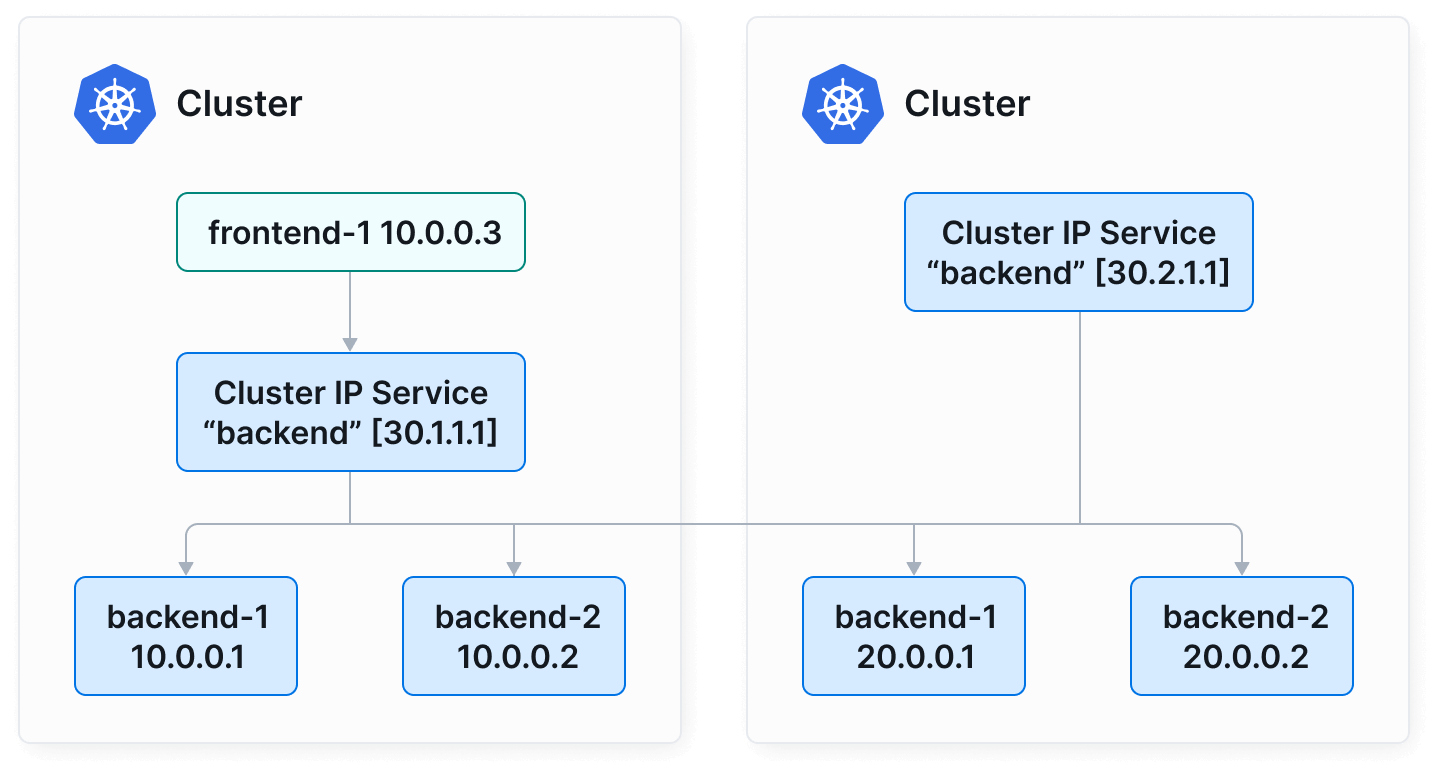

Cluster Mesh handles Pod IP routing across multiple Kubernetes clusters at native performance. By using either tunneling or direct routing, it avoids the need for any gateways or proxies. This allows your pods to communicate across clusters seamlessly, enhancing the overall efficiency of your microservice architecture.

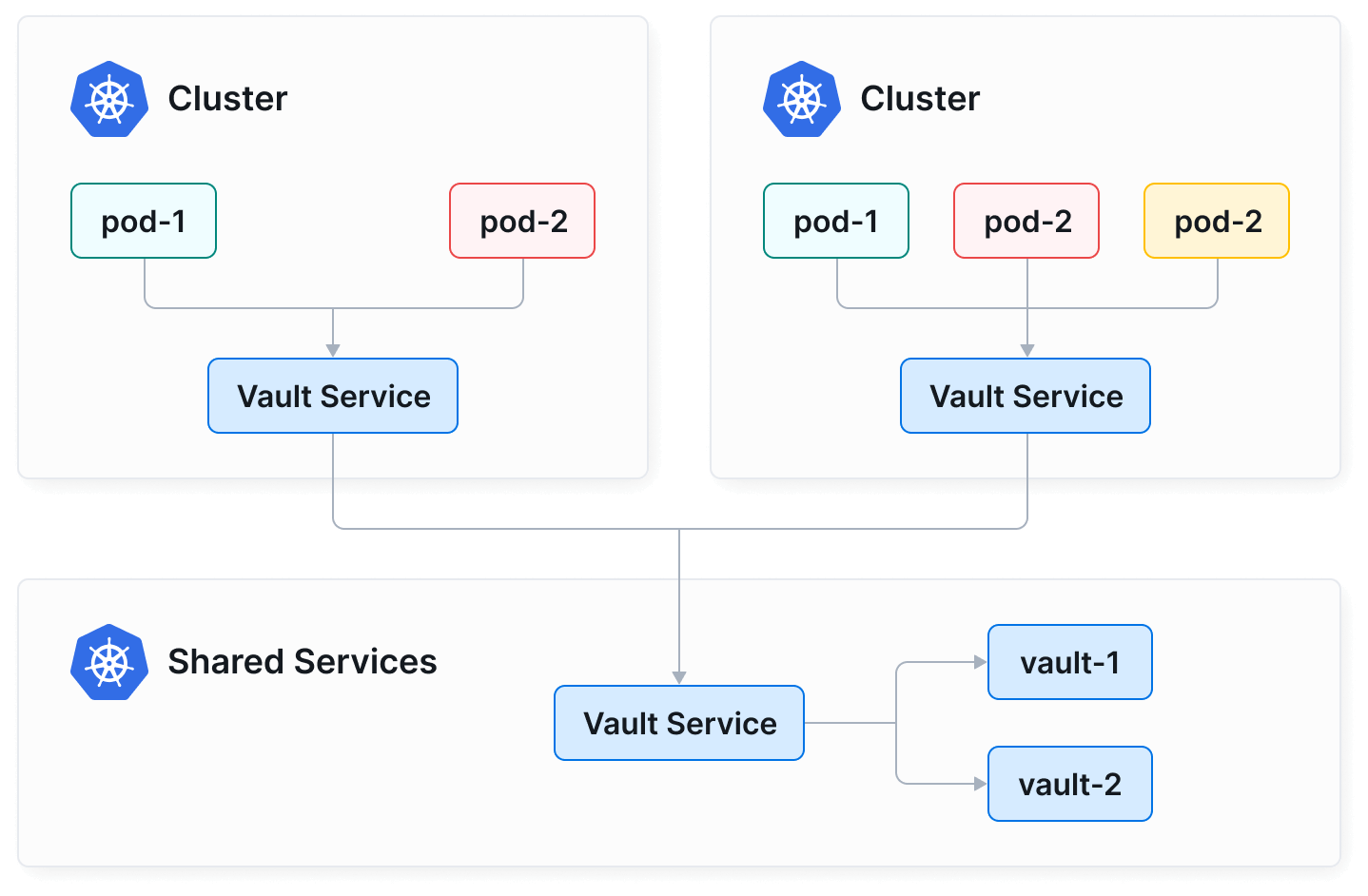

Cluster Mesh enables sharing of services such as secrets management, logging, monitoring, or DNS between all clusters. This reduces operational overhead, simplifies management, and maintains isolation between tenant clusters.

Cluster Mesh extends Cilium's Layer 3-7 network policy enforcement to all clusters in the mesh. It standardizes the application of network policies, ensuring a consistent security approach across your entire Kubernetes deployment, irrespective of the number of clusters involved.

We have payment services deployed on GCP, Azure, and AWS on their managed Kubernetes offerings. Due to regulatory requirements, we also have FPS services deployed on bare metal Kubernetes clusters across two data centers clustered using Cilium Cluster Mesh. Our engineers need to support and understand only one technology. Cilium is the company standard for networking and security; it has allowed us to secure our environment and gives us the possibility to merge our cloud services in the future.

Adelina Simion - Technology Evangelist, Form3

We didn’t plan to use ClusterMesh in the beginning, but we later realized that we have another great feature that we leverage for Ingress.

Timur Solodovnikov, SRE, ClickHouse

Cilium Cluster Mesh configuration was easy, requiring only basic VPC peering, routing tables, and firewalling setup. We directed Cilium to the etcd endpoints in the other clusters, but it now supports CRD-backed IPAM mode. We have deployed Cilium in over 20 Kubernetes production clusters, with at least 3 clusters per game in the same configuration. This infrastructure handles over 50k client requests per second and supports millions of daily active users.

Luan Guimarães - Site Reliability Engineer, Wildlife Studios

Cilium has extensive documentation that covers its features and use cases. The docs also feature tutorials for common user stories.

Get help with Cilium through Slack, GitHub, training, support, and FAQs. The community can also help you tell or promote your story around Cilium.